The "tensor" here means a PyTorch tensor.

Conversion methods

1. The vanilla approach (seems like the best one)

```python
import numpy as np
import torch


def image_tensor_to_numpy(tensor_image):
    # If this is already a numpy image, just return it
    if isinstance(tensor_image, np.ndarray):
        return tensor_image

    # Older PyTorch wrapped tensors in autograd.Variable and needed `.data` here.
    # Since PyTorch 0.4, Variable and Tensor are merged, so `.detach()` below is enough.

    # Detach from the autograd graph, move to CPU if necessary, and convert to numpy
    np_img = tensor_image.detach().cpu().numpy()

    # If there is no batch dimension, add one
    if np_img.ndim == 3:
        np_img = np_img[np.newaxis, ...]

    # Convert from BxCxHxW (PyTorch convention) to BxHxWxC (OpenCV/numpy convention)
    np_img = np_img.transpose(0, 2, 3, 1)

    return np_img


def image_numpy_to_tensor(np_image):
    # If there is no batch dimension, add one
    if np_image.ndim == 3:
        np_image = np_image[np.newaxis, ...]

    # Convert from BxHxWxC (OpenCV/numpy) to BxCxHxW (PyTorch)
    np_image = np_image.transpose(0, 3, 1, 2)

    # ascontiguousarray makes a C-contiguous copy, which also avoids torch.from_numpy's
    # error on negative-stride arrays (e.g. an RGB<->BGR flip like img[..., ::-1])
    tensor_image = torch.from_numpy(np.ascontiguousarray(np_image)).float()

    return tensor_image
```
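To sanity-check the axis order, you can round-trip a random batch. This sketch inlines the same transposes instead of calling the two functions above so that it runs on its own; the 2x3x4x5 batch shape is an arbitrary assumption.

```python
import numpy as np
import torch

# Hypothetical batch: 2 RGB images of size 4x5 in PyTorch's BxCxHxW layout
t = torch.rand(2, 3, 4, 5)

# Tensor -> numpy: detach, move to CPU, then reorder axes to BxHxWxC
np_img = t.detach().cpu().numpy().transpose(0, 2, 3, 1)
print(np_img.shape)  # (2, 4, 5, 3)

# numpy -> tensor: reorder back to BxCxHxW and wrap without changing any values
back = torch.from_numpy(np.ascontiguousarray(np_img.transpose(0, 3, 1, 2))).float()
print(torch.equal(back, t))  # True
```

If you'd rather stay in PyTorch for the reordering, `t.permute(0, 2, 3, 1)` does the same thing before calling `.numpy()`.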

2. Using the tensor -> PIL image conversion in albumentations

(I'll add this later.)

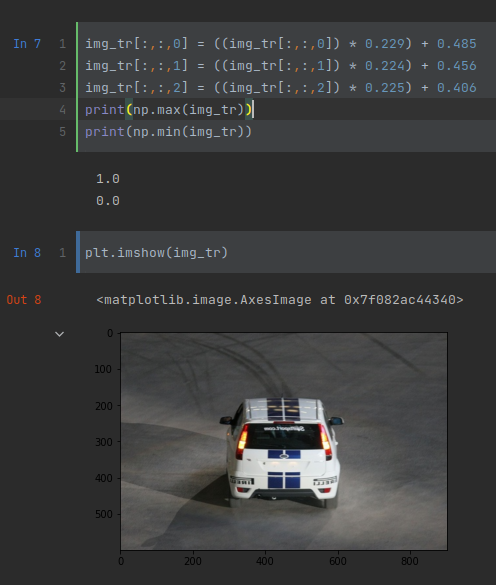

3. Doing it yourself

This got so frustrating that I just wrote it myself. Ugh...

https://pytorch.org/vision/main/generated/torchvision.transforms.Normalize.html


The torchvision `Normalize` docs describe it per channel as `output[channel] = (input[channel] - mean[channel]) / std[channel]`.

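One thing to watch out for: the images we usually deal with store R, G, B in [0, 255], but torchvision assumes the input to `Normalize` is already RGB in [0, 1]. `transforms.ToTensor` divides uint8 pixels by 255 before `Normalize` runs, and the ImageNet mean/std values are on that [0, 1] scale.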

So all you need to do is run that formula in reverse.
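Inverting it just means solving for the input, channel by channel. Writing $x_c$ for the [0, 1] input, $y_c$ for the normalized output, and $\mu_c$, $\sigma_c$ for the channel's mean and std (symbols introduced here for brevity):

```latex
y_c = \frac{x_c - \mu_c}{\sigma_c}
\quad\Longrightarrow\quad
x_c = y_c \, \sigma_c + \mu_c
```

As a worked example for channel 0 with $\mu_0 = 0.485$, $\sigma_0 = 0.229$: $x_0 = 0$ maps to $y_0 = -0.485 / 0.229 \approx -2.118$, and $x_0 = 1$ maps to $y_0 = 0.515 / 0.229 \approx 2.249$. Plugging $2.249$ back in gives $2.249 \times 0.229 + 0.485 \approx 1.000$. So with the ImageNet defaults, normalized values are not confined to [-1, 1].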

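```python
def inverse_normalize(img, mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]):
    """
    Undo torchvision's Normalize on a channel-last image.

    :param img: float numpy array. shape (height, width, channel). Normalized values
                (roughly [-2.12, 2.64] with the default ImageNet mean/std, not [-1, 1]).
    :return: numpy array. shape (height, width, channel). [0~1]
    """
    # Work on a copy so the caller's array is not modified in place
    img = img.copy()

    # Invert (x - mean) / std per channel: x = y * std + mean
    img[:, :, 0] = (img[:, :, 0] * std[0]) + mean[0]
    img[:, :, 1] = (img[:, :, 1] * std[1]) + mean[1]
    img[:, :, 2] = (img[:, :, 2] * std[2]) + mean[2]

    return img
```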
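The three per-channel lines can also be written with NumPy broadcasting over the last (channel) axis. This is a sketch, not the function above: `normalize` and `inverse_normalize_vec` are hypothetical names, the input is assumed to be a float HxWxC array in [0, 1], and the last step assumes you want a uint8 image for OpenCV/PIL display.

```python
import numpy as np

# ImageNet mean/std, the same defaults as inverse_normalize
MEAN = np.array([0.485, 0.456, 0.406])
STD = np.array([0.229, 0.224, 0.225])


def normalize(img, mean=MEAN, std=STD):
    # Forward per-channel formula (x - mean) / std; (H, W, 3) broadcasts against (3,)
    return (img - mean) / std


def inverse_normalize_vec(img, mean=MEAN, std=STD):
    # Inverse y * std + mean; returns a new array instead of modifying img in place
    return img * std + mean


rng = np.random.default_rng(0)
img = rng.random((4, 5, 3))  # hypothetical HxWxC image with values in [0, 1)

restored = inverse_normalize_vec(normalize(img))
print(np.allclose(restored, img))  # True

# Back to a displayable uint8 image: clip float error, then rescale to [0, 255]
img_u8 = (np.clip(restored, 0.0, 1.0) * 255).round().astype(np.uint8)
print(img_u8.dtype, img_u8.shape)  # uint8 (4, 5, 3)
```

Broadcasting avoids hard-coding three channels, so the same code works for any channel count as long as `mean` and `std` have matching length.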
